Many software engineers are familiar with the special “tags” that can be added to code comments for use in searches, automated task tracking, and so forth. Some of the most popular are FIXME, TODO, UNDONE, and HACK. But what about others? I’m sure plenty of people have developed other tags of good general utility that deserve to be shared.

So here are a few additional tags that I’ve adopted over the years. Some are useful standalone; others work best when combined with more common tags (like FIXME).

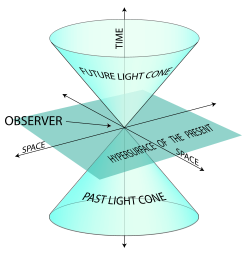

YOUAREHERE

Easily my favorite new tag of the past couple years. Before you go home for the night (or otherwise stop working, if you’re already home), drop one of these wherever in your code you’re currently working, followed by a one-paragraph braindump of your current thought context.

Example:

/*
YOUAREHERE The performance here kinda sucks, but I'm not quite sure
why yet. The initial profile indicated that accessing the frobnitz
via the foobar interface is taking 5 ms, but we're barely doing
anything in there, or at least we're not supposed to be. Need to
dig into foobar a bit more next, see what's going on there; check
with Greg if there's a known issue first.
*/

Just write a quick summary of what you just did, what the current issues are that you’re thinking about, and what your probable next step should be. Usually around 5-10 lines of comment text should be sufficient. Save the file (don’t check it in! We should all know to avoid end-of-day check-ins anyway; YOUAREHERE doesn’t change that), and then once you’ve saved it… walk away. You can do unrelated stuff like check email or whatever, but don’t mess with any more code for the day once you write your YOUAREHERE comment (if you do then you’ll have to rewrite it later, so by definition the YOUAREHERE comment only works if it’s the last comment of the day).

Doing this helps you disconnect from work, so you can fully engage in other parts of your life (family, hobbies, etc.) and then get a good night’s sleep. I’ve found that I am much less likely to have my brain endlessly (and usually unproductively) spinning on a work problem in the middle of the night if I’ve left a YOUAREHERE comment, because a lot of that spinning is driven by subconscious worries about forgetting my thought context. When that context is preserved, those worries go away. Moreover, by keeping my mind from excessively ruminating on a problem, I allow the right side of my brain to work on the problem more freely in the background (often providing better results spontaneously) while I’m away from my desk.

The next day when you get into work and are ready to start dealing with code again, open up your editor (if it’s not open already), find your YOUAREHERE comment, read it thoroughly, and spend a minute or so letting all the relevant context page its way back into your mental cache. Afterwards, you’ll feel like you’re “right where you left off” and can safely delete the YOUAREHERE comment, and carry on. Very handy!
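If your editor's search isn't handy, finding the marker the next morning is easy to script. Here's a minimal sketch in Python; the function name `find_tag` and the list of file extensions are my own assumptions, so adjust them for your project.

```python
from pathlib import Path

# Assumed set of source file extensions; change this to match your codebase.
SOURCE_SUFFIXES = {".c", ".cc", ".cpp", ".h", ".hpp"}


def find_tag(root, tag="YOUAREHERE"):
    """Return (path, line_number, line_text) for every source line under root that contains tag."""
    hits = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in SOURCE_SUFFIXES:
            continue
        # errors="replace" keeps the scan going if a file has odd bytes in it.
        text = path.read_text(encoding="utf-8", errors="replace")
        for number, line in enumerate(text.splitlines(), start=1):
            if tag in line:
                hits.append((path, number, line.strip()))
    return hits
```

Calling `find_tag(".")` from the top of your working copy should return exactly one hit if you've followed the one-per-day rule; more than one probably means an old marker never got deleted.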

TESTTEST

Doing a code search for “TEST” comes up with way too many hits, but “TESTTEST” is considerably more rare. Use this for temporary test code that you don’t need or want to turn into a more permanent unit test, when you just want to quickly verify that something basically works. Having a special tag like this reduces the likelihood that you’ll accidentally check this stuff in, since you can spot it quickly during a pre-check-in review.
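The pre-check-in review can be partly automated. The sketch below assumes you can get your pending changes as unified diff text (most version control systems can produce one) and reports only the lines you are adding that carry the tag. The function name `added_lines_with_tag` is hypothetical, and the parsing is deliberately simple: an added line whose own content begins with `++ ` would be mistaken for a file header.

```python
def added_lines_with_tag(diff_text, tag="TESTTEST"):
    """Return (file, text) for each added line in a unified diff that contains tag."""
    hits = []
    current_file = None
    for line in diff_text.splitlines():
        if line.startswith("+++ "):
            # Header naming the new version of the file, e.g. "+++ b/src/foo.cpp".
            current_file = line[4:].strip()
        elif line.startswith("+") and tag in line:
            # An added line; drop the leading "+" and surrounding whitespace.
            hits.append((current_file, line[1:].strip()))
    return hits
```

Removed lines (those starting with `-`) are ignored on purpose: deleting a TESTTEST block is exactly what you want to be doing before check-in.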

VOODOO

Best used in combination with FIXME or HACK, the VOODOO tag conveys an extra sense of fragility that needs to be respected. VOODOO code is code that ostensibly works, but for the time being you don’t necessarily know why (even though you wrote it!), and for that reason it’s not to be trusted. You need to take these code blocks seriously when working in their vicinity, and any attempted direct fixes should be done with extreme care.

Ideally VOODOO blocks don’t last very long (we should all completely understand the code we write, yes?), but code doesn’t work in isolation; it often calls into other code which is potentially insufficiently documented or understood (especially problematic if it’s closed source).

I’d be lying if I told you that every one of these VOODOO blocks gets fixed before ship. Nobody should want to remain ignorant, but once we get into areas like weird graphics driver behavior, these kinds of situations do come up. Having a dedicated tag like VOODOO indicates that the ability to provide a true fix may be beyond the boundary of available knowledge, and that one should tread carefully as a result.

Use VOODOO very sparingly, for obvious reasons. Junior engineers using VOODOO should try to clear those blocks with a more experienced engineer before checking in code that contains them. Senior engineers using them should try to find an appropriate middleware or hardware DevRel to talk to.

Note: Never, ever use VOODOO in connection with multithreading-related code (e.g. VOODOO that “fixes” a deadlock). That’s not VOODOO code, it’s a time bomb.

WART

Old code that is being preserved “for reference”. If you have a usable version control system (you do, don’t you?) you should clear WARTs out before check-in, unless they’re combined with another tag like TODO or FIXME indicating a potential loss of functionality that you intend to regain soon. I need to remember to use this one more often. 🙂
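That rule is mechanical enough to check with a script. A rough sketch, assuming the justifying TODO or FIXME sits on the same line as the WART tag (that's my assumption for the sake of a simple check, and `unjustified_warts` is a made-up name):

```python
import re

# WART as a whole word, so "STALWART" or "WARTS" in a comment won't match.
WART = re.compile(r"\bWART\b")
# A TODO or FIXME (including ranked forms like FIXME$$) counts as a reason to keep the WART.
KEEP_REASON = re.compile(r"\b(TODO|FIXME)")


def unjustified_warts(source_text):
    """Return (line_number, line_text) for WART lines that carry no TODO or FIXME."""
    hits = []
    for number, line in enumerate(source_text.splitlines(), start=1):
        if WART.search(line) and not KEEP_REASON.search(line):
            hits.append((number, line.strip()))
    return hits
```

Anything this returns is old code with no stated reason to survive, so it's a candidate for deletion before check-in.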

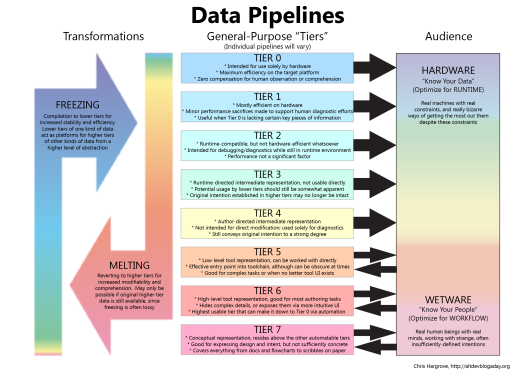

Ranked FIXMEs

This is a variant on our old friend the FIXME. A number of years ago I noticed my friend Scott Bilas would use sequences of dollar signs in his comments ($, $$, $$$, …) to indicate things that needed attention; more dollar signs meant a higher priority.

I liked the principle, but the dollar signs alone gave a little less context than I cared for. So I started combining them with FIXME, resulting in comments like this (note that the “CDH” is just my initials; I have a personal rule of attributing my FIXMEs):

// CDH FIXME$$$ data needs to be sorted to be used more easily in editor

Again the number of dollar signs is based on subjective priority, but my (very rough) ranking has been something like this:

- FIXME$ : Don’t really care that much, I doubt I’ll ever be idle enough to fix these, but it’s good to make a note.

- FIXME$$ : Eh, this actually may need some attention at some point, but it’s probably not too pressing.

- FIXME$$$ : This probably needs to get fixed before ship.

- FIXME$$$$ : Should be fixed before the next milestone.

- FIXME$$$$$ : You should fix this before check-in.

- FIXME$$$$$$ : Fix this today.

I’d be lying if I said I held to this strictly (there are some FIXME$$$$$ I’ve written that have been checked in and probably still survive months or years later), but I attribute that more to my own imperfect-but-slowly-improving ability to recognize an appropriate priority for these issues, rather than an inherent deficiency in the ranking concept itself.

In any case, it’s nice to be able to do a plain-text search for “FIXME$$$” and get only FIXMEs ranked three dollar signs or higher, since any higher rank contains that string too. By the way, I use dollar signs because I work primarily in C++, where $ is infrequently used. For languages that do use it (like Perl), you’re better off choosing another symbol that is equally rare in the code itself.
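One caveat if you search with regular expressions instead of plain text: `$` is a regex metacharacter (end of line), so it has to be escaped, or you can use a fixed-string mode such as `grep -F`. Here's a small sketch of a rank-aware search in Python; the function name `fixmes_at_least` is hypothetical.

```python
import re

# "\$+" matches one or more literal dollar signs right after FIXME.
RANKED_FIXME = re.compile(r"FIXME(\$+)")


def fixmes_at_least(source_text, min_rank):
    """Return (line_number, rank, line_text) for FIXMEs with at least min_rank dollar signs."""
    hits = []
    for number, line in enumerate(source_text.splitlines(), start=1):
        match = RANKED_FIXME.search(line)
        if match:
            rank = len(match.group(1))  # the rank is just the count of dollar signs
            if rank >= min_rank:
                hits.append((number, rank, line.strip()))
    return hits
```

Because the rank comes back as a number, you can sort the results and work through the most urgent ones first. Unranked FIXMEs (no dollar signs) are skipped by this sketch.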

Enough For Today

Got some good tags of your own? Share them! 🙂